Sudoku solver

In this article I’ll share my sudoku solver and the thought process that went into it.

A comparison with Peter Norvig’s solver will follow, as well as what I learned from studying it.

The code blocks in the text are simple illustrations and aren’t mandatory to follow the article. Unless you really want to be exposed to it, you should probably skip them.

All the code shown here is available on my gitlab. You may also like Peter Norvig’s essay on his own solver.

My approach

Concept inventory

I tried to approach the task the same way Peter Norvig does in his CS 212 class and made a concept inventory. I found that it helps thinking about the bigger picture and coming up with useful functions that reduce repetition. I needed:

- A way to represent the grid.

- A function to print the grid.

- A place to store candidates

- Strategies to assign numbers to a square

- A way to check that the grid is solved

This was my first draft. The list expanded after I started working on it.

Basics

I chose a 2D array for the grid. It’s how I see the grid and it would be easy to print out. It works, but as I’ll discuss later maybe those aren’t the best criteria for choosing a data structure. (Note: a puzzle input is a string of lines with ‘0’ or ‘.’ for blanks)

def grid(puzzle):

"""Returns a 2D array representing the grid with '.' for blanks"""

sudoku_grid = []

for line in puzzle.replace(' ','').replace('0', '.').splitlines():

sudoku_grid.append(list(line))

return sudoku_grid

I used a dictionary of (coordinates_tuple) : list_of_numbers for the candidates. Again, it works, but why not store them in the initial array? My reasoning was that I’d probably be printing the grid while debugging and having the candidates would make it awkward to read. While true, I could have just changed the show_grid function to take that into account.

def initialize(puzzle, height, width, candidates):

"""Write down every candidates for every single square on the board"""

for y in range(height):

for x in range(width):

if puzzle[y][x] == '.':

candidates[(y, x)] = possible_numbers.copy()

return candidates

A grid is solved if each region (row, column, square) is a set of all digits from 1 to 9. I could also just check that the candidates dictionary is empty, or that there’s no ‘.’ in the puzzle array. I stuck with this definition because I felt it would be more robust, in case I introduced bugs in other parts of the code that led to assigning invalid numbers.

def is_solved(puzzle):

"""Return whether a grid is solved"""

return all([set(''.join([puzzle[y][x] for (y,x) in region])) == possible_numbers for region in all_regions])

(As an example of expanding the concept inventory, this meant I needed a way to generate all regions)

Strategies

This was the crux of the task. I thought about how I solved Sudokus, and implemented the following strategies first because they seemed the easiest to code.

- Single position: Within a region, look for a number that can only be in one cell

- Single candidate: Look for a cell that only has one candidate in it

def single_candidate(puzzle, candidates, changed = False):

"""Check the candidates for all unassigned squares.

If there's only one candidate, fill that square with the number"""

deletion_list = []

for (y,x) in candidates:

influencers = set([puzzle[j][i] for (j,i) in get_influencers(y,x) if puzzle[j][i] != '.'])

candidates[(y,x)] -= influencers

if len(candidates[(y,x)]) == 1:

puzzle[y][x] = list(candidates[(y,x)])[0]

changed = True; deletion_list.append((y, x))

for square in deletion_list: del candidates[square]

return puzzle, candidates, changed

def single_position(puzzle, candidates, changed = False):

"""Within a row, column or 3x3 square, look for a number that has only one candidate.

Fill the square that has this candidate with the number"""

for region in all_regions:

found = set([puzzle[y][x] for (y, x) in region if puzzle[y][x] != '.'])

searching = possible_numbers - found

region_candidates = [candidates[(y, x)] for (y, x) in region if puzzle[y][x] == '.']

for number in searching:

if sum([list(region_candidate).count(number) for region_candidate in region_candidates]) == 1:

for (y, x) in region:

if puzzle[y][x] == '.' and number in candidates[(y, x)]:

puzzle[y][x] = number

changed = True; del candidates[(y, x)]

return puzzle, candidates, changed

I kept track of whether or not I made a change using those functions and looped through them while changes were made. If no change was made, I would check whether the puzzle was solved or not.

Of course, these strategies are basic. You can’t solve many Sudokus with them. There are many more strategies, including guessing, which can be used. At this point I got into my head that I should try to code a program that solves Sudokus without guessing, because it felt much more elegant to me.

So I implemented other strategies (names from here): candidate regions, hidden groups, naked groups…

With each strategy added, the program would solve more difficult puzzles, but the speed would be pretty significantly reduced.

The issue is that for every strategy, I’m always iterating through everything, or almost everything.

Again, I was coding this thinking about how I would approach difficult Sudokus: look for places where an advanced strategy can be applied, and use it.

Giving up and guessing

I realized that trying not to guess was silly. I would never be absolutely sure the program could solve any grid without guessing, and it would probably be faster to just guess than to use all the advanced strategies the way I was doing it.

I thought the best way to guess was to pick a square with the least number of candidates, choose one at random and try to solve it this way, recursively. This approach is called Depth-First Search (DFS). You should make a copy of you current puzzle state so that you can just discard any unsuccessful guess. Unfortunately, with my use of a 2D-array this requires deepcopy, which works but is inefficient.

def solve(puzzle, candidates, height = 9, width = 9, changed = True, guessing = 0):

candidates = initialize(puzzle, height, width, candidates)

while changed:

changed = False

puzzle, candidates, changed = single_candidate(puzzle, candidates, changed)

puzzle, candidates, changed = single_position(puzzle, candidates, changed)

if not changed: candidates, changed = naked_groups(candidates, changed)

if not is_solved(puzzle):

if any(len(candidates[(y, x)]) == 0 for (y, x) in candidates) or not candidates:

if not guessing: print('This puzzle cannot be solved')

else: return False

choose = min(candidates.items(), key = lambda x:len(x[1]))

y, x, choices = choose[0][0], choose[0][1], list(choose[1])

for choice in choices:

puzzle_copy, changed_copy = copy.deepcopy(puzzle), True

puzzle_copy[y][x] = choice

puzzle_copy = solve(puzzle_copy, {}, height, width, changed_copy, guessing+1) # I initially kept track of how many guesses where made for curiosity

if puzzle_copy:

if is_solved(puzzle_copy):

return puzzle_copy

return puzzle

I limited the advanced strategies to the naked groups one after doing some timings because I found that it helped solve hard puzzles faster and didn’t make much of a difference for easier puzzles.

At this point I was confident my program could solve any valid Sudoku, and it seemed reasonably fast to me (solving evil sudokus from sudoku.com in under 0.02 secs), so I went to check Peter Norvig’s solver.

Peter Norvig’s approach

DFS is also used by Norvig when his strategies have failed to solve the puzzle. Before resorting to DFS though, he uses a different approach, outlined here:

Those with experience solving Sudoku puzzles know that there are two important strategies that we can use to make progress towards filling in all the squares:

(1) If a square has only one possible value, then eliminate that value from the square's peers.

(2) If a unit has only one possible place for a value, then put the value there.

These are similar to my single_candidate and single_position strategy. But while both of mine focus on assigning a value in certain conditions, his first one focuses exclusively on eliminating candidates. This focus on elimination is important, because as Norvig puts it later:

It turns out that the fundamental operation is not assigning a value, but rather eliminating one of the possible values for a square

This is absolutely correct, but it was counter-intuitive to me. I think about where numbers can go primarily. Where they can’t go doesn’t register as a step in my thinking process, at least in the simplest strategies, because it is automated. I do consider it in the more complex ones, but I ended up not using those strategies anyway!

The reason this is important is because assigning a number will automatically update the possible values for its peers. Again, I’ll quote Norvig:

These updates to [a cell] may in turn cause further updates to its peers, and the peers of those peers, and so on. This process is called constraint propagation.

This constraint propagation is a tree of operations. The number of branches can quickly get unmanageable for humans, and I’d be surprised if this was strictly applied by anyone when solving Sudokus. You can definitely follow a few levels of propagation, but you’re bound to forget one at some point. Then you’re back to systematically searching for places where you can apply a strategy.

Forgetting is not such a problem for computers, however! Using a constraint propagation strategy means that you’ll limit the number of fruitless checking. Intuitively, this should be much better than my approach overall, and most importantly in the harder sudokus.

We can test that assumption using the sets of puzzles Norvig himself used to time his program. I was surprised that my code runs faster on average on easy puzzles, but I’m indeed nearly 10x slower on the harder ones!

It took me a bit of time to properly understand his code.

################ Constraint Propagation ################

def assign(values, s, d):

"""Eliminate all the other values (except d) from values[s] and propagate.

Return values, except return False if a contradiction is detected."""

other_values = values[s].replace(d, '')

if all(eliminate(values, s, d2) for d2 in other_values):

return values

else:

return False

def eliminate(values, s, d):

"""Eliminate d from values[s]; propagate when values or places <= 2.

Return values, except return False if a contradiction is detected."""

if d not in values[s]:

return values ## Already eliminated

values[s] = values[s].replace(d,'')

## (1) If a square s is reduced to one value d2, then eliminate d2 from the peers.

if len(values[s]) == 0:

return False ## Contradiction: removed last value

elif len(values[s]) == 1:

d2 = values[s]

if not all(eliminate(values, s2, d2) for s2 in peers[s]):

return False

## (2) If a unit u is reduced to only one place for a value d, then put it there.

for u in units[s]:

dplaces = [s for s in u if d in values[s]]

if len(dplaces) == 0:

return False ## Contradiction: no place for this value

elif len(dplaces) == 1:

# d can only be in one place in unit; assign it there

if not assign(values, dplaces[0], d):

return False

return values

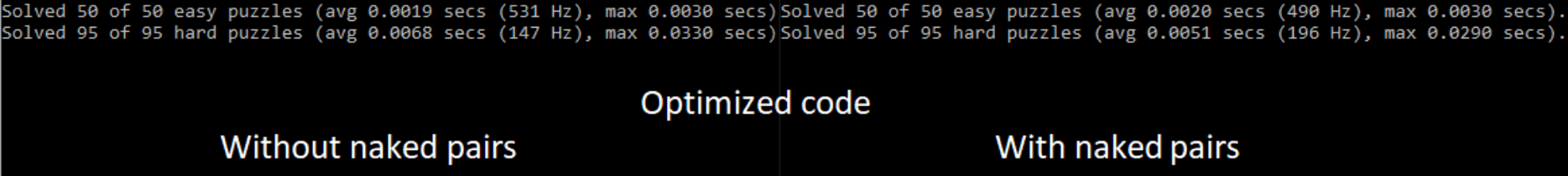

His two strategies are set up as mutually recursive functions, which always takes some effort to wrap my head around. After studying it, I rewrote it from memory, changing the data structure from a dictionary to a single list. This turned out to be even faster. Implementing the “naked pairs” strategy gives a good boost to the harder puzzles at the cost of a few milliseconds for the easier ones, which I think is worth it.

Key takeaways

Overall, my code worked and was not abysmally slow in most cases, but there was definitely room for improvement.

I had fun coding that solver and I was able to use some of the tricks I’d learned in Norvig’s CS 212 class on Udacity. The most valuable teachings came from comparing my code to Norvig’s and reflecting on what lead to my choices.

- If I want to write an efficient program, I think I should spend more time trying to break down the core mechanics involved. Simply relying on my intuition works, but most likely won’t be the best. At the core, proper understanding of the underlying mechanics will lead me to write a program that avoids unnecessary work (also known as non-pessimization). This is the reason my code runs much slower on hard puzzles.

- Trying to emulate human processes isn’t necessarily a good idea. Every design decision was based on reproducing my (allegedly) human thought process. Computers are quite different and have very different strengths. I found it funny because I’d read about this concept a couple of days before starting my solver, but still boldly went ahead with the human approach. Relevant quotes from the article:

Seeking an improvement that makes a difference in the shorter term, researchers seek to leverage their human knowledge of the domain, but the only thing that matters in the long run is the leveraging of computation.

We have to learn the bitter lesson that building in how we think we think does not work in the long run.

-

Debugging convenience most likely shouldn’t be a primary design choice.

-

If possible, find a good code to compare against!