fastai course

About a month ago I started a machine/deep learning online course, based on the book Deep Learning for Coders.

I’ve watched all currently available lessons, read half the book, completed a few tiny projects and a slightly bigger one. I’ll share my impressions of the course here and a few details on the projects.

Spoilers: it’s an amazing course. Part 2 has just been announced for next month and I’m hoping to join the live lessons.

Practicality

The online course adds “Practical” to the book’s title, Deep Learning for Coders, and they mean it. The course uses a top-down approach, teaching you in barely 2 hours how to make your own image classifier using the fastai library and upload it to Hugging Face, then progressively going into more detail.

Having sat through many lectures from brilliant practitioners who were hopeless teachers (at best), it was refreshing to have a fantastic educator in the person of Jeremy Howard. He and his team have not only put together a superb lecture/book combo, they have also built a framework that makes it effortless to take your first steps in deep learning. This way, students have all the necessary tools when Jeremy advises them to go on and experiment. I may have been unlucky, but I’ve never taken a course as whole and congruent with its philosophy as this one.

The first advantage of this approach seems rather obvious. It’s fun!

I was able to make a flag classifier after 2 lessons with minimal effort, as well as my own digit classifier for my sudoku app. Neither was made from scratch, and that’s the point. Most of the time, it makes more sense to use transfer learning (starting from an already trained model).

The second advantage is that it gets you in the right mindset for future discoveries. Indeed, many advances in the field (and in others) came from experiments, with theory following the interesting results (ULMFiT, GANs… for other examples, check out Radek Osmulski’s book).

Jeremy Howard’s first piece of advice for people starting out in deep learning is to train lots of models, so I heeded it and went to work on a few things.

Application

Smaller projects

The flag and digit classifiers were very quick projects. I just picked up image results from a DuckDuckGo search to build the flags dataset, trained a resnet18 on it using a few different combinations of image transformations and kept the best result. The digit classifier used an existing dataset from Kaggle and followed a similar process. I also trained a movie recommendation system for myself using a MovieLens dataset. Its top recommendation was Spirited Away, which I’d never watched. I really enjoyed it. It’s a solid 4/5 at least, so I consider the experiment a success!

Bird Classifier

The thing I really wanted to make, though, was a classifier for bird species that live in France. But with over 600 regular species, I didn’t think I could get any kind of acceptable results.

The first hurdle was to get a good dataset. Search engine results can’t be trusted here: the amount of mislabelling would be unacceptable. I ended up scraping images from a great French website using a Python script. I collected at most 50 pictures for each species (the maximum number on the pages I was scraping), but usually fewer. This made it easy to automatically label the data, and I had reasonable confidence in its accuracy.

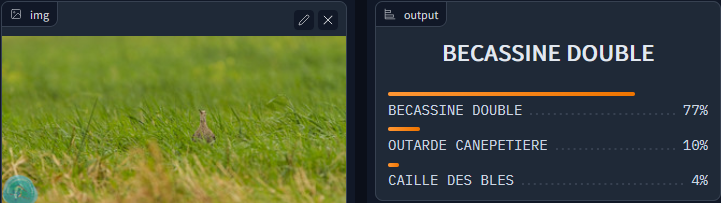

I trained a few models (ConvNeXt small from timm was the chosen one) and could only get about 77% accuracy. Error analysis revealed a couple of issues: a few species had drawings instead of pictures, or pictures of eggs. There weren’t many though, and fixing them didn’t improve the accuracy significantly. The most obvious issue was with the Great Snipe, where, surprisingly, the model got that prediction right with fair confidence.

That didn’t seem possible, so I had a look at the training set.

Yeah… Always check your data!
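The kind of error analysis described above can be approximated with very little code. The following is a minimal sketch, not the code used for this project: it assumes the validation labels and predictions are available as two plain lists of species names (the sample values are made up), and the helper names `per_class_accuracy` and `most_confused` are hypothetical.

```python
from collections import Counter

def per_class_accuracy(true_labels, predicted_labels):
    """Return {label: fraction of that label's examples predicted correctly}."""
    total = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {label: correct[label] / count for label, count in total.items()}

def most_confused(true_labels, predicted_labels, top=3):
    """Return the most frequent (true, predicted) mistakes, most common first."""
    mistakes = Counter(
        (t, p) for t, p in zip(true_labels, predicted_labels) if t != p
    )
    return mistakes.most_common(top)

# Made-up validation results, for illustration only.
y_true = ["Great Snipe", "Common Snipe", "Common Snipe",
          "Arctic Tern", "Common Tern", "Common Tern"]
y_pred = ["Great Snipe", "Common Snipe", "Great Snipe",
          "Common Tern", "Common Tern", "Common Tern"]

accuracy_by_species = per_class_accuracy(y_true, y_pred)
worst_pairs = most_confused(y_true, y_pred)

# Species sorted from worst to best, to decide which folders to inspect first.
for species, acc in sorted(accuracy_by_species.items(), key=lambda kv: kv[1]):
    print(f"{species}: {acc:.0%}")
```

Species with suspiciously low (or suspiciously high) scores are the ones whose training images are worth opening by hand. fastai’s `ClassificationInterpretation` class provides similar tooling directly on a trained learner.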

Given that I was using some of the best pre-trained computer vision models, it seemed the only way forward was to get a better dataset.

Writing the Python script for this new round of scraping was fun! The script had to:

- Grab the Latin species name matching the French name on oiseaux.net’s list

- Grab the matching taxonomy code used on eBird from a csv file in order to

- Access the pictures page (ranked by descending picture quality) for the corresponding species on eBird

- Click the “More results” button until it got 120 displayed pictures

- Grab the link to each picture and download it

It took a bit over 20 hours to run (I deliberately slowed the script to avoid overloading their servers), but I had an improved dataset! With about 100 pictures per species in the training set, I hoped that with data augmentation this would be enough.
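The name-matching and throttling parts of such a script can be sketched as follows. This is an illustration under stated assumptions, not the actual script: the CSV column names (`latin_name`, `code`), the placeholder codes and the helper names are all hypothetical, and the page navigation and download steps are left out entirely.

```python
import csv
import io
import time

def load_taxonomy_codes(csv_file):
    """Map lowercase Latin names to taxonomy codes (column names are assumed)."""
    reader = csv.DictReader(csv_file)
    return {row["latin_name"].strip().lower(): row["code"].strip() for row in reader}

def resolve_codes(french_to_latin, latin_to_code):
    """Return ({french name: code}, [french names without a match])."""
    found, missing = {}, []
    for french, latin in french_to_latin.items():
        code = latin_to_code.get(latin.strip().lower())
        if code is None:
            missing.append(french)
        else:
            found[french] = code
    return found, missing

def throttled(items, delay_seconds):
    """Yield items one at a time, pausing between them to spare the server."""
    for index, item in enumerate(items):
        if index:
            time.sleep(delay_seconds)
        yield item

# In-memory stand-in for the taxonomy file; the codes are placeholders.
SAMPLE_CSV = io.StringIO(
    "latin_name,code\nGallinago media,code-a\nSterna hirundo,code-b\n"
)
french_to_latin = {
    "Bécassine double": "Gallinago media",
    "Sterne pierregarin": "Sterna hirundo",
    "Oiseau inconnu": "Avis ignota",  # deliberately unmatched example
}

latin_to_code = load_taxonomy_codes(SAMPLE_CSV)
codes, unmatched = resolve_codes(french_to_latin, latin_to_code)

for french_name in throttled(sorted(codes), delay_seconds=0.01):
    # A real script would open the species page and download pictures here.
    print(french_name, codes[french_name])
```

Collecting the unmatched names separately makes it easy to review spelling or taxonomy differences between the two sources before launching a run that takes many hours.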

And the result was worth the wait, with the current model reaching 90% accuracy. Error analysis revealed the large majority of mistakes happened on species that are very close to each other and difficult to tell apart even for experts. But in this case, the model almost always gets the family right. Given the resemblance, it’s possible some of these are mislabelled, of course.
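One way to quantify “gets the family right” is to map every species to its family and score the predictions at both levels. The sketch below assumes a species-to-family dictionary is available; the sample labels are made up and the function names are hypothetical.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of positions where the prediction equals the true label."""
    pairs = list(zip(true_labels, predicted_labels))
    return sum(t == p for t, p in pairs) / len(pairs)

def family_accuracy(true_species, predicted_species, family_of):
    """Accuracy after collapsing each species label to its family."""
    true_families = [family_of[s] for s in true_species]
    predicted_families = [family_of[s] for s in predicted_species]
    return accuracy(true_families, predicted_families)

# Tiny illustrative mapping; a real one would cover every species in the dataset.
family_of = {
    "Common Tern": "Laridae",
    "Arctic Tern": "Laridae",
    "Great Snipe": "Scolopacidae",
    "Common Snipe": "Scolopacidae",
}

# Made-up predictions, for illustration only.
y_true = ["Common Tern", "Arctic Tern", "Great Snipe", "Common Snipe"]
y_pred = ["Arctic Tern", "Arctic Tern", "Great Snipe", "Common Tern"]

species_acc = accuracy(y_true, y_pred)
family_acc = family_accuracy(y_true, y_pred, family_of)
print(f"species: {species_acc:.0%}, family: {family_acc:.0%}")
```

A large gap between the two numbers suggests that most remaining errors are confusions between close relatives rather than wild misses.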

To improve on this, I had the idea to use a first model to recognize the family, then to use a second one from a pool of specialized models to get the species. To gauge whether an improvement was possible, I attempted to train a model exclusively on all tern species and checked its accuracy. It didn’t do better than the model trained on all 600 species, so I abandoned the idea. Merging both datasets didn’t improve the accuracy either, with error analysis showing basically the same results.
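For reference, the two-stage idea boils down to a small routing function. This is only a sketch of the concept: the “models” here are stand-in Python functions rather than trained networks, and every name in it is hypothetical.

```python
def two_stage_predict(image, family_model, species_models, fallback_model):
    """Predict the family first, then ask that family's specialist for the species.

    Falls back to a general model when no specialist exists for the family.
    """
    family = family_model(image)
    specialist = species_models.get(family, fallback_model)
    return family, specialist(image)

# Stand-in models: each takes an "image" (here a dict) and returns a label.
def toy_family_model(image):
    return image["family_hint"]

def toy_tern_specialist(image):
    return "Common Tern"

def toy_general_model(image):
    return "Great Snipe"

species_models = {"Laridae": toy_tern_specialist}

tern_result = two_stage_predict(
    {"family_hint": "Laridae"}, toy_family_model, species_models, toy_general_model
)
snipe_result = two_stage_predict(
    {"family_hint": "Scolopacidae"}, toy_family_model, species_models, toy_general_model
)
print(tern_result, snipe_result)
```

The design only pays off if a specialist beats the general model within its own family, which is exactly what the tern experiment failed to show.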

Having enjoyed Rachel Thomas’ excellent lecture on Data Ethics, I will mention that the model is generally biased towards adult male birds, due to a higher prevalence of male specimens in pictures.

Closing thoughts

We also suggest that you iterate from end to end in your project; that is, don’t spend months fine-tuning your model, or polishing the perfect GUI, or labelling the perfect dataset… Instead, complete every step as well as you can in a reasonable amount of time, all the way to the end. […] By completing the project end to end, you will see where the trickiest bits are, and which bits make the biggest difference to the final result.

Jeremy Howard, Sylvain Gugger - Practical Deep Learning for Coders

Having stuck as close as possible to this suggestion, I can immediately see its value.

I later read Andrew Ng’s book Machine Learning Yearning and found that several of its recommendations had come naturally during the process, either because they were explicitly mentioned in the fastai course or because they followed logically from its focus on practicality.

The course and book got me completely hooked on machine/deep learning. I can’t wait for part 2!